by Chris Buskirk

America ran out of frontier when we hit the Pacific Ocean. And that changed things. Alaska and Hawaii were too far away to figure in most people’s aspirations, so for decades, it was the West Coast states and especially California that represented dreams and possibilities in the national imagination. The American dream reached its apotheosis in California. After World War II, the state became our collective tomorrow. But today, it looks more like a future that the rest of the country should avoid—a place where a few coastal enclaves have grown fabulously wealthy while everyone else falls further and further behind.

After World War II, California led the way on every front. The population was growing quickly as people moved to the state in search of opportunity and young families had children. The economy was vibrant and diverse. Southern California benefited from the presence of defense contractors. San Diego was a Navy town, and demobilized GIs returning from the Pacific Front decided to stay and put down roots. Between 1950 and 1960, the population of the Los Angeles metropolitan area swelled from 4,046,000 to 6,530,000. The Jet Propulsion Laboratory was inaugurated in the 1930s by researchers at the California Institute of Technology. One of the founders, Jack Parsons, became a prominent member of an occult sect in the late 1940s based in Pasadena that practiced “Thelemic Magick” in ceremonies called the “Babalon Working.” L. Ron Hubbard, the founder of Scientology (1950), was an associate of Parsons and rented rooms in his home. The counterculture, or rather, countercultures, had deep roots in the state.

Youth culture was born in California, arising out of a combination of rapid growth, the Baby Boom, the general absence of extended families, plentiful sunshine, the car culture, and the space afforded by newly built suburbs where teenagers could be relatively free from adult supervision. Tom Wolfe memorably described this era in his 1963 essay “The Kandy-Kolored Tangerine-Flake Streamline Baby.” The student protest movement began in California too. In 1960, hundreds of protesters, many from the University of California at Berkeley, sought to disrupt a hearing of the House Un-American Activities Committee at the San Francisco City Hall. The police turned fire hoses on the crowd and arrested over thirty students. The Baby Boomers may have inherited the protest movement, but they didn’t create it. Its founders were part of the Silent Generation. Clark Kerr, the president of the UC system who earned a reputation for giving student protesters what they wanted, was from the Greatest Generation. Something in California, and in America, had already changed.

California was a sea of ferment during the 1960s—a turbulent brew of contrasting trends, as Tom O’Neill described it:

The state was the epicenter of the summer of love, but it had also seen the ascent of Reagan and Nixon. It had seen the Watts riots, the birth of the antiwar movement, and the Altamont concert disaster, the Free Speech movement and the Hells Angels. Here, defense contractors, Cold Warriors, and nascent tech companies lived just down the road from hippie communes, love-ins, and surf shops.

Hollywood was the entertainment capital of the world, producing a vision of peace and prosperity that it sold to interior America—and to the world as the beau ideal of the American experiment. It was a prosperous life centered around the nuclear family living in a single-family home in the burgeoning suburbs. Doris Day became America’s sweetheart through a series of romantic comedies, but the turbulence in her own life foreshadowed America’s turn from vitality to decay. She was married three times, and her first husband either embezzled or mismanaged her substantial fortune. Her son, Terry Melcher, was closely associated with Charles Manson and the Family, along with Dennis Wilson of the Beach Boys—avatars of the California lifestyle that epitomized the American dream.

The Manson Family spent the summer of 1968 living and partying with Wilson in his Malibu mansion. The Cielo Drive home in the Hollywood Hills where Sharon Tate and four others were murdered in August 1969 had been Melcher’s home and the site of parties that Manson attended. The connections between Doris Day’s son, the Beach Boys, and the Manson Family have a darkly prophetic valence in retrospect. They were young, good-looking, and carefree. But behind the clean-cut image of wholesome American youth was a desperate decadence fueled by titanic drug abuse, sexual outrages that were absurd even by the standards of Hollywood in the 60s, and self-destructiveness clothed in the language of pseudo-spirituality.

The California culture of the 1960s now looks like a fin-de-siècle blow-off top. The promise, fulfillment, and destruction of the American dream appears distilled in the Golden State, like an epic tragedy played out against a sunny landscape where the frontier ended. Around 1970, America entered into an age of decay, and California was in the vanguard.

Up, Up, and Away

The expectation of constant progress is deeply ingrained in our understanding of the world, and of America in particular. Some metrics do generally keep rising: gross domestic product mostly goes up, and so does the stock market. According to those barometers, things must be headed mostly in the right direction. Sure there are temporary setbacks—the economy has recessions, the stock market has corrections—but the long-term trajectory is upward. Are those metrics telling us that the country is growing more prosperous? Are they signals, or noise?

There is much that GDP and the stock market don’t tell us about, such as public and private debt levels, wage trends, and wealth concentration. In fact, during a half-century in which reported GDP grew consistently and the stock market reached the stratosphere, real wages have crept up very slowly, and living standards have flatlined or even declined for the middle and working classes. Many Americans have a feeling that things aren’t going in the right direction or that the country has lost its societal health and vigor, but aren’t sure how to describe or measure the problem. We need broader metrics of national prosperity and vitality, including measures of noneconomic values like family stability or social trust.

There are many different criteria for national vitality. First, is the country guarded against foreign aggression and at peace with itself? Are people secure in their homes, free from government harassment, and safe from violent crime? Is prosperity broadly shared? Can the average person get a good job, buy a house, and support a family without doing anything extraordinary? Are families growing? Are people generally healthy, and is life span increasing or at least not decreasing? Is social trust high? Do people have a sense of unity in a common destiny and purpose? Is there a high capacity for collective action? Are people happy?

We can sort quantifiable metrics of vitality into three main categories: social, economic, and political. There is a spiritual element too, which for my purposes falls under the social category. The social factors that can readily be measured include things like age at first marriage (an indicator of optimism about the future), median adult stature (is it rising or declining?), life expectancy, and prevalence of disease. Economic measures include real wage trends, wealth concentration, and social mobility. Political metrics relate to polarization and acts of political violence.

Many of these tend to move together over long periods of time. It’s easy to look at an individual metric and miss the forest for the trees, not seeing how it’s one manifestation of a larger problem in a dynamic system. Solutions proposed to deal with one concern may cause unexpected new problems in another part of the system. It’s a society-wide game of whack-a-mole. What’s needed is a more comprehensive understanding of structural trends and what lies behind them. From the founding period in America until about 1830, those factors were generally improving. Life expectancy and median height were increasing, both indicating a society that was mostly at peace and had plentiful food. Real wages roughly tripled during this period as labor supply growth was slow. There was some political violence. But for decades after independence, the country was largely at peace and citizens were secure in their homes. There was an overarching sense of shared purpose in building a new nation.

Those indicators of vitality are no longer trending upward. Let’s start with life expectancy. There is a general impression that up until the last century, people died very young. There’s an element of truth to this: we are now less susceptible to death from infectious disease, especially in early childhood, than were our ancestors before the 20th century. Childhood mortality rates were appalling in the past, but burying a young child is now a rare tragedy. This is a very real form of progress, resulting from more reliable food supplies as a result of improvements in agriculture, better sanitation in cities, and medical advances, particularly the antibiotics and certain vaccines introduced in the first half of the 20th century. A period of rapid progress was then followed by a long period of slow, expensive improvement at the margins.

When you factor out childhood mortality, life spans have not grown by much in the past century or two. A study in the Journal of the Royal Society of Medicine says that in mid-Victorian England, life expectancy at age five was 75 for men and 73 for women. In 2016, according to the Social Security Administration, the American male life expectancy at age five was 71.53 (which means living to age 76.53). Once you’ve made it to five years, your life expectancy is not much different from your great-grandfather’s. Moreover, Pliny tells us that Cicero’s wife, Terentia, lived to 103. Eleanor of Aquitaine, queen of both France and England at different times in the 12th century, died a week shy of her 82nd birthday. A study of 298 famous men born before 100 B.C. who did not die by murder, in battle, or by suicide found that their average age at death was 71.

More striking is that people who live completely outside of modern civilization without Western medicine today have life expectancies roughly comparable to our own. Daniel Lieberman, a biological anthropologist at Harvard, notes that “foragers who survive the precarious first few years of infancy are most likely to live to be 68 to 78 years old.” In some ways, they are healthier in old age than the average American, with lower incidences of inflammatory diseases like diabetes and atherosclerosis. It should be no surprise that an active life spent outside in the sun, eating wild game and foraged plants, produces good health.

Recent research shows that not only are we not living longer, we are less healthy and less mobile during the last decades of our lives than our great-grandfathers were. This points to a decline in overall health. We have new drugs to treat Type I diabetes, but there is more Type I diabetes than in the past. We have new treatments for cancer, but there is more cancer. Something has gone very wrong. What’s more, between 2014 and 2017, median American life expectancy declined every year. In 2017 it was 78.6 years, then it decreased again between 2018 and 2020 to 76.87. The figure for 2020 includes COVID deaths, of course, but the trend was already heading downward for several years, mostly from deaths of despair: diseases associated with chronic alcoholism, drug overdoses, and suicide. The reasons for the increase in deaths of despair are complex, but a major contributing factor is economic: people without good prospects over an extended period of time are more prone to self-destructive behavior. This decline is in contrast to the experience of peer countries.

In addition to life expectancy, other upward trends have stalled or reversed in the past few decades. Family formation has slowed. The total fertility rate has dropped to well below replacement level. Real wages have stagnated. Debt levels have soared. Social mobility has stalled and income inequality has grown. Material conditions for most people have improved little except in narrow parts of life such as entertainment.

Trends, Aggregate, and Individuals

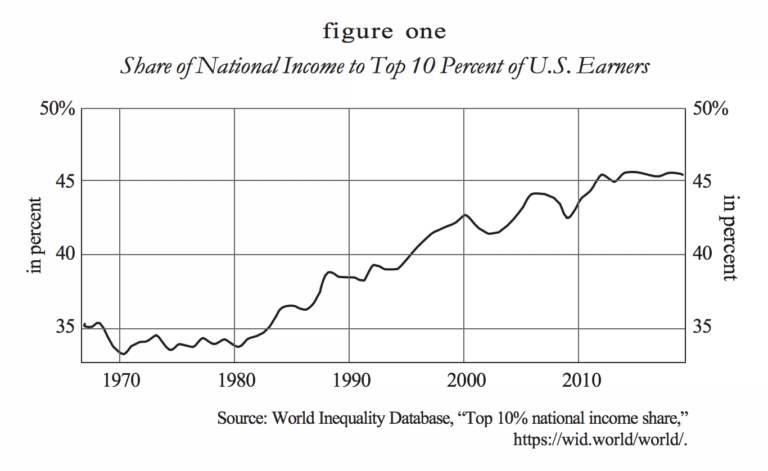

The last several decades have been a story of losing ground for much of middle America, away from a handful of wealthy cities on the coasts. The optimistic story that’s been told is that both income and wealth have been rising. That’s true in the aggregate, but when those numbers are broken down the picture is one of a rising gap between a small group of winners and a larger group of losers. Real wages have remained essentially flat over the past 50 years, and the growth in national wealth has been heavily concentrated at the top. The chart below represents the share of national income that went to the top 10 percent of earners in the United States. In 1970 it was 33.3 percent; in 2019 the figure was 45.4 percent.

Disparities in wealth have become more closely tied to educational attainment. Between 1989 and 2019, household wealth grew the most for those with the highest level of education. For households with a graduate degree, the increase was 31 percent; with a college degree, it was 17 percent; with a high school degree, about 4 percent. Meanwhile, household wealth declined by a precipitous 60 percent for high school dropouts, including those with a GED. In 1989, households with a college degree had 2.74 times the wealth of those with only a high school diploma; in 2019 it was 3.08 times as much. In 1989, households with a graduate degree had 4.85 times the wealth of the high school group; in 2019, it was 6.12 times as much. The gap between the graduate degree group and the college group increased by 12 percent. The gaps between the groups are growing in real dollars. It’s true that people have some control over the level of education they attain, but college has become costlier, and it’s fundamentally unnecessary for many jobs, so the growing wealth disparity by education is a worrying trend.
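The ratios quoted above can be cross-checked with a little arithmetic. The sketch below assumes that the three growth figures (4, 17, and 31 percent) apply to the same wealth measure as the 1989 ratios; the variable and function names are illustrative and do not come from the underlying study.

```python
# Growth in household wealth over the period, as quoted in the text.
growth = {"high_school": 0.04, "college": 0.17, "graduate": 0.31}

# 1989 wealth expressed as a multiple of the high school group's wealth.
ratio_1989 = {"college": 2.74, "graduate": 4.85}


def end_ratio(group: str) -> float:
    """Return the group's wealth as a multiple of the high school group's
    wealth after both groups have grown at their quoted rates."""
    return ratio_1989[group] * (1 + growth[group]) / (1 + growth["high_school"])


college_vs_hs = end_ratio("college")
graduate_vs_hs = end_ratio("graduate")

# The change in the graduate-to-college gap depends only on the two growth rates.
grad_vs_college_change = (1 + growth["graduate"]) / (1 + growth["college"]) - 1

print(f"college / high school:  {college_vs_hs:.2f}")
print(f"graduate / high school: {graduate_vs_hs:.2f}")
print(f"graduate-college gap grew by {grad_vs_college_change:.0%}")
```

Under these assumptions the college multiple comes out near 3.08 and the graduate-to-college gap grows by about 12 percent, in line with the text. The graduate multiple comes out near 6.11, which is within rounding of the quoted 6.12.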

Wealth is relative: if your wealth grew by 4 percent while that of another group increased by 17 percent, then you are poorer. What’s more crucial, however, is purchasing power. If the costs of middle-class staples like healthcare, housing, and college tuition are climbing sharply while wages stagnate, then living standards will decline.

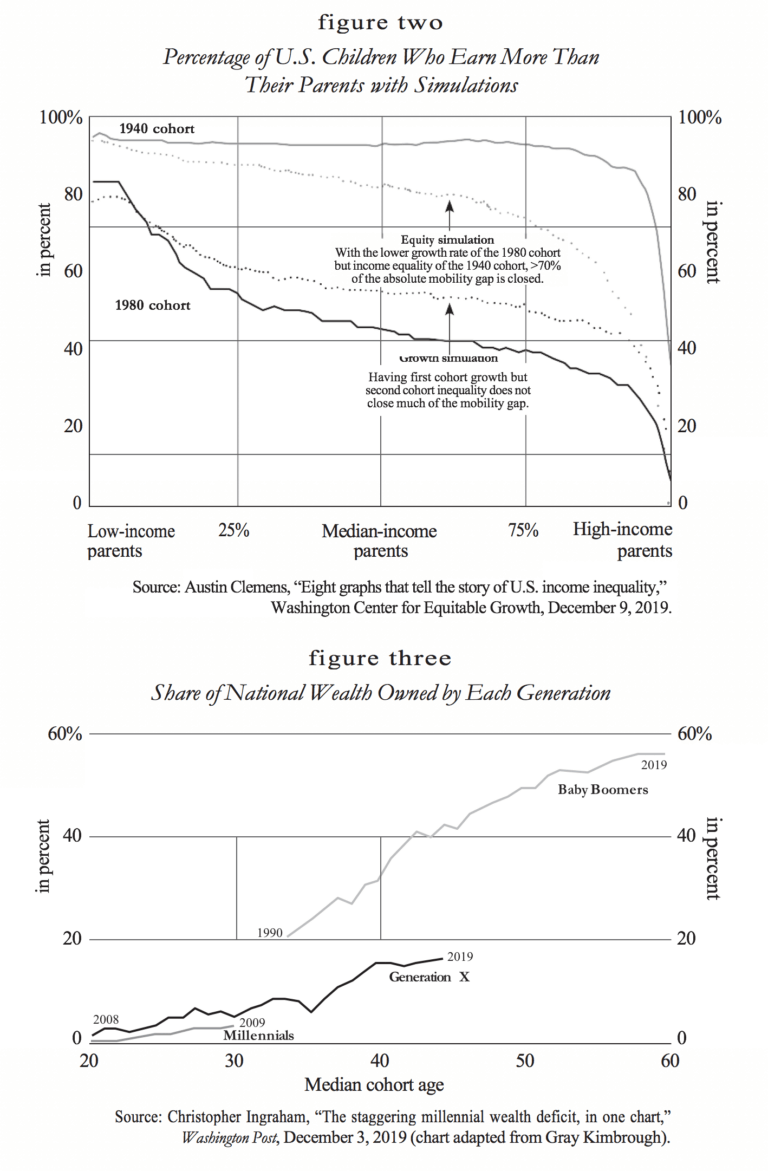

More problematic than growing wealth disparity in itself is diminishing economic mobility. A big part of the American story from the beginning has been that children tend to end up better off than their parents were. By most measures, that hasn’t been true for decades.

The chart below compares the birth cohorts of 1940 and 1980 in terms of earning more than parents did. The horizontal axis indicates the relative income level of the parents. Among the older generation, over 90 percent earned more than their parents, except for those whose parents were at the very high end of the income scale. Among the younger generation, the percentages were much lower, and also more variable. For those whose parents had a median income, only about 40 percent would do better. In this analysis, low growth and high inequality both suppress mobility.

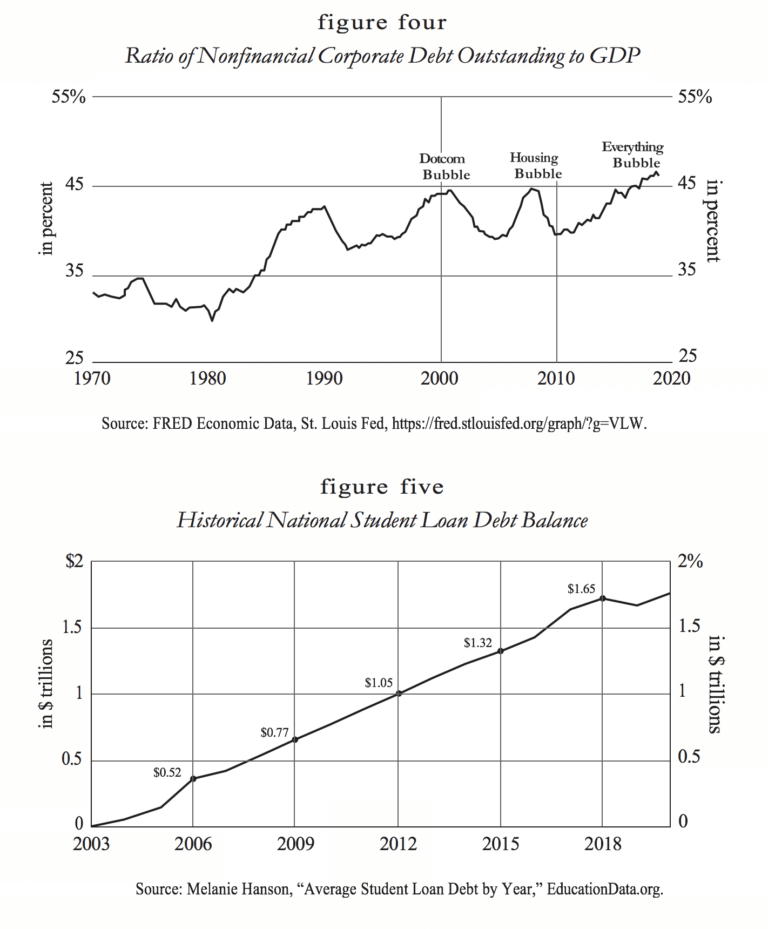

Over time, declining economic mobility becomes an intergenerational problem, as younger people fall behind the preceding generation in wealth accumulation. The graph below illustrates the proportion of the national wealth held by successive generations at the same stage of life, with the horizontal axis indicating the median age for the group. Baby Boomers (birth years 1946–1964) owned a much larger percentage of the national wealth than the two succeeding generations at every point.

At a median age of 45, for example, the Boomers owned approximately 40 percent of the national wealth. At the same median age, Generation X (1965–1980) owned about 15 percent. The Boomer generation was 15–18 percent larger than Gen X and it had 2.67 times as much of the national wealth. The Millennial generation (1981–1996) is bigger than Gen X though a little smaller than the Boomers, and it has owned about half of what Gen X did at the same median age.
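To see what the generational comparison implies per person, the shares can be adjusted for the size of each generation. This is only a rough sketch: it assumes the approximate shares quoted above and treats "15 to 18 percent larger" as a simple head-count ratio, which is a simplification of my own rather than a method from the source data.

```python
# Approximate shares of national wealth at a median age of 45, from the text.
boomer_share = 0.40
gen_x_share = 0.15

# How many times more of the national wealth the Boomers held in total.
share_ratio = boomer_share / gen_x_share


def per_capita_ratio(size_premium: float) -> float:
    """Boomer-to-Gen-X wealth share per person, given how much larger
    the Boomer generation is (0.15 means 15 percent larger)."""
    return share_ratio / (1 + size_premium)


low_estimate = per_capita_ratio(0.18)   # Boomers 18 percent larger
high_estimate = per_capita_ratio(0.15)  # Boomers 15 percent larger

print(f"total share ratio: {share_ratio:.2f}")
print(f"per-person ratio:  {low_estimate:.2f} to {high_estimate:.2f}")
```

Even after adjusting for head count, the Boomers' share per person at the same age is still more than twice Gen X's under these figures.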

Those are some measurable indicators of the nation’s vitality, and they tell us that something is going wrong. A key reason for stagnant wages, declining mobility, and growing disparities of wealth is that economic growth overall has been sluggish since around 1970. And the main reason for slower growth is that the long-term growth in productivity that created so much wealth for America and the world over the prior two centuries slowed down.

Wealth and the New Frontier

There are other ways to increase the overall national wealth. One is by acquiring new resources, which has been done in various ways: through territorial conquest, or the incorporation of unsettled frontier lands, or the discovery of valuable resources already in a nation’s territory, such as petroleum reserves in recent history. Getting an advantageous trade agreement can also be a way of increasing resources.

Through much of American history, the frontier was a great source of new wealth. The vast supply of mostly free land, along with the other resources it held, was not just an economic boon; it also shaped American culture and politics in ways that were distinct from the long-settled countries of Europe where the frontier had been closed for centuries and all the land was owned space.

But there can be a downside to becoming overly dependent on any one resource. Aside from gaining new resources, real economic growth comes from either population growth or productivity growth. Population growth can add to the national wealth, but it can also put strain on supplies of essential resources. What elevates living standards broadly is productivity growth, making more out of available resources. A farmer who tills his fields with a steel plough pulled by a horse can cultivate more land than a farmer doing it by hand. It allows him to produce more food that can be consumed by a bigger family, or the surplus can be sold or traded for other goods. A farmer driving a plough with an engine and reaping with a mechanical combine can produce even more.

But productivity growth is driven by innovation. In the example above, there is a progression from farming by hand with a simple tool, to the use of metal tools and animal power, to the use of complicated machinery, each of which greatly increases the amount of food produced per farmer. This illustrates the basic truth that technology is a means of reducing scarcity and generating surpluses of essential goods, so labor and resources can be put toward other purposes, and the whole population will be better off.

Total factor productivity (TFP) refers to economic output relative to the size of all primary inputs, namely labor and capital. Over time, a nation’s economic output tends to grow faster than its labor force and capital stock. This might owe to better labor skills or capital management, but it is primarily the result of new technology. In economics, productivity growth is used as a proxy for the application of innovation. If productivity is rising, it is understood to mean that applied science is working to reduce scarcity. The countries that lead in technological innovation naturally reap the benefits first and most broadly, and therefore have the highest living standards. Developing countries eventually get the technology too, and then enjoy the benefits in what is called catch-up growth. For example, China first began its national electrification program in the 1950s, when electricity was nearly ubiquitous in the United States. The project took a few decades to complete, and China saw rapid growth as wide access to electric power increased productivity.
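One common textbook way to make the idea of TFP concrete is to treat it as the residual left over after accounting for capital and labor. The sketch below assumes a Cobb-Douglas production function with a capital share of 0.3; that functional form, the `tfp` helper, and all the numbers are illustrative assumptions, not figures from this essay.

```python
def tfp(output: float, capital: float, labor: float, alpha: float = 0.3) -> float:
    """Residual productivity under an assumed Cobb-Douglas form:
    output = A * capital**alpha * labor**(1 - alpha), solved for A."""
    return output / (capital ** alpha * labor ** (1 - alpha))


# A hypothetical economy in two consecutive years (made-up index numbers).
year_1 = {"output": 100.0, "capital": 300.0, "labor": 50.0}
year_2 = {"output": 104.0, "capital": 306.0, "labor": 50.5}

# Output grows 4 percent, capital 2 percent, labor 1 percent.
tfp_growth = tfp(**year_2) / tfp(**year_1) - 1

print(f"TFP growth: {tfp_growth:.1%}")
```

In this made-up case output grows faster than the weighted growth of the inputs, and the difference (a bit under 3 percent) is what gets attributed to productivity. Real statistical agencies use considerably more detailed methods.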

The United States still leads the way in innovation, though now with more competition than at any time since World War II. But the development of productivity-enhancing new technologies has been slower over the past few decades than in any comparable span of time since the beginning of the Industrial Revolution in the early 18th century. The obvious advances in a few specific areas, particularly digital technology, are exceptions that prove the rule. The social technologies of recent years facilitate consumption rather than production. As a result, growth in total factor productivity has been slow for a long time. According to a report from Rabobank, “TFP growth deteriorated from an average annual growth of 1.1% over the period 1969–2010 to 0.4% in 2010 to 2018.”
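The gap between 1.1 percent and 0.4 percent a year looks small until it is compounded. As a rough illustration, the sketch below applies each of the two quoted rates over the same 40-year horizon; the horizon and the `cumulative_growth` helper are assumptions chosen for comparison, not part of the Rabobank report.

```python
def cumulative_growth(annual_rate: float, years: int) -> float:
    """Total growth after compounding a constant annual rate."""
    return (1 + annual_rate) ** years - 1


horizon = 40  # assumed comparison horizon, in years

faster = cumulative_growth(0.011, horizon)  # the 1969-2010 average rate
slower = cumulative_growth(0.004, horizon)  # the 2010-2018 average rate

print(f"1.1% a year for {horizon} years: {faster:.0%} total")
print(f"0.4% a year for {horizon} years: {slower:.0%} total")
```

At the earlier rate, productivity would rise by roughly half over four decades; at the later rate, by less than a fifth.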

In The Great Stagnation, Tyler Cowen suggested that the conventional productivity measures may be misleading. For example, he noted that productivity growth through 2000–2004 averaged 3.8 percent, a very high figure and an outlier relative to most of the last half-century. Surely some of that growth was real owing to the growth of the internet at the time, but it also coincided with robust growth in the financial sector, which ended very badly in 2008.

“What we measured as value creation actually may have been value destruction, namely too many homes and too much financial innovation of the wrong kind.” Then, productivity shot up by over 5 percent in 2009–2010, but Cowen found that it was mostly the result of firms firing the least productive people. That may have been good business, but it’s not the same as productivity rising because innovation is reducing scarcity and thus leading to better living standards. Over the long term, when productivity growth slows or stalls, overall economic growth is sluggish. Median real wage growth is slow. For most people, living standards don’t just stagnate but decline.

You Owe Me Money

As productivity growth has slowed, the economy has become more financialized, which means that resources are increasingly channeled into means of extracting wealth from the productive economy instead of producing goods and services. Peter Thiel said that a simple way to understand financialization is that it represents the increasing influence of companies whose main business or source of value is producing little pieces of paper that essentially say, you owe me money. Wall Street and the companies that make up the financial sector have never been larger or more powerful. Since the early 1970s, financial firms’ share of all corporate earnings has roughly doubled to nearly 25 percent. As a share of real GDP, it grew from 13–15 percent in the early 1970s to nearly 22 percent in 2020.

The profits of financial firms have grown faster than their share of the economy over the past half-century. The examples are everywhere. Many companies that were built to produce real-world, nondigital goods and services have become stealth finance companies, too. General Electric, the manufacturing giant founded by Thomas Edison, transformed itself into a black box of finance businesses, dragging itself down as a result. The total market value of major airlines like American, United, and Delta is less than the value of their loyalty programs, in which people get miles by flying and by spending with airline-branded credit cards. In 2020, American Airlines’ loyalty program was valued at $18–$30 billion while the market capitalization of the entire company was $14 billion. This suggests that the actual airline business—flying people from one place to another—is valuable only insofar as it gets people to participate in a loyalty program.
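The American Airlines comparison amounts to a simple subtraction. The sketch below uses only the figures quoted above and deliberately ignores debt and differences in valuation method, so it is an illustration of the reasoning rather than a proper valuation; the function name is my own.

```python
# Figures quoted in the text for American Airlines in 2020, in billions of dollars.
market_cap = 14.0
loyalty_value_low, loyalty_value_high = 18.0, 30.0


def implied_rest_of_business(company_value: float, loyalty_value: float) -> float:
    """Value left over for everything except the loyalty program
    (a naive subtraction that ignores debt and valuation method)."""
    return company_value - loyalty_value


best_case = implied_rest_of_business(market_cap, loyalty_value_low)
worst_case = implied_rest_of_business(market_cap, loyalty_value_high)

print(f"implied value of the flying business: {worst_case:.0f} to {best_case:.0f} billion")
```

On this naive reading, the market was assigning a negative value to the business of actually flying passengers.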

The main result of financialization is best explained by the “Cantillon effect,” which means that money creation, over a long period of time, redistributes wealth upward to the already rich. This effect was first described in the 18th century by Richard Cantillon after he observed the results of introducing a paper money system. He noted that the first people to receive the new money saw their incomes rise, while the last to receive it saw a decline in their purchasing power because of consumer price inflation. The first to receive newly created money are banks and other financial institutions. They are called “Cantillon insiders,” a term coined by Nick Szabo, and they get the most benefit. But all owners of assets—including stocks, real estate, even a home—are enriched to some extent by the Cantillon effect. Those who own a lot of assets benefit the most, and financial assets tend to increase in value faster than other types, but all gain value. This is a version of the Matthew Principle, taken from Jesus’ Parable of the Sower: to those who have, more will be given. The more assets you own, the faster your wealth will increase.

Meanwhile, the people without assets fall behind as asset prices rise faster than incomes. Inflation hawks have long worried that America’s decades-long policy of running large government deficits combined with easy money from the Fed will lead to runaway inflation that beggars average Americans. This was seen clearly in 2022 after the massive increase in dollars created by the Fed in 2020 and 2021.

Even so, they’ve mostly been looking for inflation in the wrong place. It’s true that the prices of many raw materials, such as lumber and corn, have soared recently, followed by much more broad-based inflation in everything from food to rent, but inflation in the form of asset price bubbles has been with us for much longer. Those bubbles pop and prices drop, but the next bubble raises them even higher. Asset price inflation benefits asset owners, but not the people with few or no assets, like young people just starting out and finding themselves unable to afford to buy a home.

The Cantillon effect has been one of the main vectors of increased wealth concentration over the last 40 years. One way that the large banks use their insider status is by getting short-term loans from the Federal Reserve and lending the money back to the government by buying longer-term treasuries at a slightly higher interest rate and locking in a profit.

Their position in the economy essentially guarantees them profits, and their size and political influence protect them from losses. We’ve seen the pattern of private profits and public losses clearly in the savings and loan crisis of the 1980s, and in the financial crisis of 2008. Banks and speculators made a lot of money in the years leading up to the crisis, and when the losses on their bad loans came due, they got bailouts.

Moral Hazard

The Cantillon economy creates moral hazard in that large companies, especially financial institutions, can privatize profits and socialize losses. Insiders, and shareholders more broadly, can reap massive gains when the bets they make with the company’s capital pay off. When the bets go bad, the company gets bailed out. Alan Krueger, the chief economist at the Treasury Department in the Obama Administration, explained years later why banks and not homeowners were rescued from the fallout of the mortgage crisis: “It would have been extremely unfair, and created problems down the road to bail out homeowners who were irresponsible and took on homes they couldn’t afford.” Krueger glossed over the fact that the banks had used predatory and deceptive practices to initiate risky loans, and when they lost hundreds of billions of dollars (or trillions, by some estimates), they were bailed out while homeowners were kicked out. That callous indifference alienates and radicalizes the forgotten men and women who have been losing ground.

Most people know about the big bailouts in 2008, but the system that joins private profit with socialized losses regularly creates incentives for sloppiness and corruption. The greed sometimes takes ridiculous forms. But once that culture takes over, it poisons everything it touches. Starting in 2002, for example, Wells Fargo began a scam in which it paid employees to open more than 3.5 million unauthorized checking accounts, savings accounts, and credit cards for retail customers. By exaggerating growth in the number of active retail accounts, the bank could give investors a false picture of the health of its retail business. It also charged those customers monthly service fees, which contributed to the bottom line and bolstered the numbers in quarterly earnings reports to Wall Street. Bigger profits led to higher stock prices, enriching senior executives whose compensation packages included large options grants.

John Stumpf, the company’s CEO from 2007 to 2016, was forced to resign and disgorge around $40 million in repayments to Wells Fargo and fines to the federal government. Bloomberg estimates that he retained more than $100 million. Wells Fargo paid a $3 billion fine, which amounted to less than two months’ profit, as the bank’s annual profits averaged around $19.7 billion from 2017 to 2019. And this was for a scam that lasted nearly 15 years.
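The "less than two months’ profit" comparison is easy to reproduce from the figures above. The sketch below assumes profits were spread evenly across the year and takes the scheme's length as 15 years; both are simplifications for illustration.

```python
fine_billion = 3.0            # fine paid by Wells Fargo, from the text
annual_profit_billion = 19.7  # average annual profit, 2017 to 2019, from the text
scheme_years = 15             # "nearly 15 years", rounded up as an assumption

# How many months of average profit it takes to cover the fine.
months_of_profit = fine_billion / (annual_profit_billion / 12)

# The fine spread evenly over the life of the scheme.
fine_per_year_billion = fine_billion / scheme_years

print(f"fine equals {months_of_profit:.1f} months of profit")
print(f"fine per year of the scheme: ${fine_per_year_billion:.1f} billion")
```

Seen this way, the penalty works out to a small annual cost relative to the bank's earnings.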

What is perhaps most absurd and despicable about this scheme is that Wells Fargo was conducting it during and even after the credit bubble, when the bank received billions of dollars in bailouts from the government. The alliance between the largest corporations and the state leads to corrupt and abusive practices. This is one of the second-order effects of the Cantillon economy.

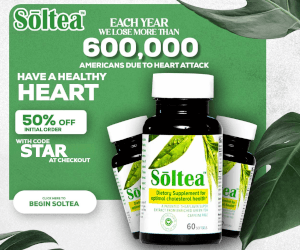

Another effect is that managers respond to short-term financial incentives in a way that undermines the long-term vitality of their own company. An excessive focus on quarterly earnings is sometimes referred to as short-termism. Senior managers, especially at the C-suite level of public companies, are largely compensated with stock options, so they have a strong incentive to see the stock rise. In principle, a rising stock price should reflect a healthy, growing, profitable company. But managers figured out how to game the system: with the Fed keeping long-term rates low, corporations can borrow money at a much lower rate than the expected return in the stock market. Many companies have taken on long-term debt to finance stock repurchases, which helps inflate the stock price. This practice is one reason that corporate debt has soared since 1980.
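A stylized example shows why cheap debt makes buybacks attractive to managers paid in stock. Everything in the sketch below is hypothetical: the company, the interest rates, and the simplifications (no taxes, and a share price that stays fixed while shares are repurchased).

```python
def eps_after_buyback(net_income: float, shares: float, share_price: float,
                      borrowed: float, interest_rate: float) -> float:
    """Earnings per share after borrowing to repurchase stock.
    Simplified: ignores taxes and assumes the buyback price does not move."""
    shares_retired = borrowed / share_price
    interest_cost = borrowed * interest_rate
    return (net_income - interest_cost) / (shares - shares_retired)


# Hypothetical company: income in $ millions, shares in millions.
net_income, shares, share_price, borrowed = 1_000.0, 500.0, 40.0, 2_000.0

eps_before = net_income / shares  # earnings yield is 2 / 40 = 5 percent
eps_cheap_debt = eps_after_buyback(net_income, shares, share_price, borrowed, 0.03)
eps_costly_debt = eps_after_buyback(net_income, shares, share_price, borrowed, 0.06)

print(f"EPS before buyback:        {eps_before:.2f}")
print(f"EPS after, borrowing at 3%: {eps_cheap_debt:.2f}")
print(f"EPS after, borrowing at 6%: {eps_costly_debt:.2f}")
```

In this toy case, earnings per share rise when the borrowing rate is below the company's earnings yield and fall when it is above, even though nothing about the underlying business has changed. That is the sense in which low long-term rates reward financial engineering over investment.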

The Cantillon effect distorts resource allocation, incentivizing rent-seeking in the financial industry and rewarding nonfinancial companies for becoming stealth financial firms. Profits are quicker and easier in finance than in other industries. As a result, many smart, ambitious people go to Wall Street instead of trying to invent useful products or seeking a new source of abundant power—endeavors that don’t have as much assurance of a payoff. How different might America be if the incentives were structured to reward the people who put their brain power and energy into those sorts of projects rather than into quantitative trading algorithms and financial derivatives of home mortgages.

While the financial industry does well, the manufacturing sector lags. Because of COVID-19, Americans discovered that the United States has very limited capacity to make the personal protective equipment that was in such urgent demand in 2020. We do not manufacture any of the most widely prescribed antibiotics, or drugs for heart disease or diabetes, nor any of the chemical precursors required to make them. A close look at other vital industries reveals the same penury. The rare earth minerals necessary for batteries and electronic screens mostly come from China because we have intentionally shuttered domestic sources or failed to develop them. We’re dependent on Taiwan for the computer chips that go into everything from phones to cars to appliances, and broken supply chains in 2021 led to widespread shortages. The list of necessities we import because we have exported our manufacturing base goes on.

Financialization of the economy amplifies the resource curse that has come with dollar supremacy. Richard Cantillon described a similar effect when he observed what happened to Spain and Portugal when they acquired large amounts of silver and gold from the New World. The new wealth raised prices, but it went largely into purchasing imported goods, which ruined the manufactures of the state and led to general impoverishment. In America today, a fiat currency that serves as the world’s reserve is the resource curse that erodes the manufacturing base while the financial sector flourishes. Since the dollar’s value was formally dissociated from gold in 1976, it now rests on American economic prosperity, political stability, and military supremacy. If these advantages diminish relative to competitors, so will the value of the dollar.

Dollar supremacy has also encouraged a debt-based economy. Federal debt as a share of GDP has risen from around 38 percent in 1970 to nearly 140 percent in 2020. Corporate debt has had peaks and troughs over those decades, but each new peak is higher than the last. In the 1970s, total nonfinancial corporate debt in the United States ranged between 30 and 35 percent of GDP. It peaked at about 43 percent in 1990, then at 45 percent with the dot-com bubble in 2001, then slightly higher with the housing bubble in 2008, and now it’s approximately 47 percent. As asset prices have climbed faster than wages, consumer debt has soared from 43.2 percent of GDP in 1970 to over 75 percent in 2020.

Student loan debt has soared even faster in recent years: in 2003, it totaled $240 billion, basically a rounding error, but by 2020, the sum had ballooned to seven times as large, at $1.68 trillion, which amounts to around 8 percent of GDP. Increases in aggregate debt throughout society are a predictable result of the Cantillon effect in a financialized economy.

The Rise of the Two-Income Family

The Cantillon effect generates big gains for those closest to the money spigot, and especially those at the top of the financial industry, while the people furthest away fall behind. Average families find it more difficult to buy a home and maintain a middle-class life. In 90 percent of U.S. counties today, the median-priced single-family home is unaffordable on the median wage. One of the ways that families try to make ends meet is with the promiscuous use of credit. It’s one of the reasons that personal and household debt levels have risen across the board. People borrow money to cover the gap between expectations and reality, hoping that economic growth will soon pull them out of debt. But for many, it’s a trap they can never escape.

Another way that families have tried to keep up is by adding a second income. In 2018, over 60 percent of families were two-income households, up from about 30 percent in 1970. This change is not a result of a simple desire to do wage work outside the home or of “increased opportunities,” as we are often told. The reason is that it now takes two incomes to support the needs of a middle-class family, whereas 50 years ago, it required only one. As more people entered the labor market, the value of labor declined, setting up a vicious cycle in which a second income came to be more necessary. China’s entry into the World Trade Organization in 2001 put more downward pressure on the value of labor.

When people laud the fact that we have so many more two-income families—generally meaning more women working outside the home—as evidence that there are so many great opportunities, what they’re really doing is retconning something usually done out of economic necessity. Needing twice as much labor to get the same result is the opposite of what happens when productivity growth is robust. It also means that the raising of children is increasingly outsourced. That’s not an improvement.

Another response to stagnant wages is to delay family formation and have fewer children. In 1960, the median age of a first marriage was about 20.5 years. In 2010, it was approximately 27, and in 2020 it was an all-time high of over 29. At the same time, the total fertility rate of American women was dropping: from 3.65 in 1960 down to 2.1, roughly replacement level, in the early 1970s. Currently, it hovers around 1.8. Some people may look on this approvingly, worried as they are about overpopulation and the impact of humans on the environment. But when people choose to have few or no children, it is usually not a political choice. That doesn’t mean it is simply a “revealed preference,” a lower desire for a family and children, rather than a reflection of personal challenges or how people view their prospects for the future. Surely it’s no coincidence that the shrinking of families has happened at the same time that real wages have stagnated or grown very slowly, while the costs of housing, health care, and higher education have soared.

The fact that American living standards have broadly stagnated, and for some segments of the population have declined, should be cause for real concern to the ruling class. Americans expect economic mobility and a chance for prosperity. Without it, many will believe that the government has failed to deliver on its promises. The Chinese Communist Party is regarded as legitimate by the Chinese people because it has presided over a large, broad, multigenerational rise in living standards. If stagnation or decline in the United States is not addressed effectively, it will threaten the legitimacy of the governing institutions.

But instead of meeting the challenge head-on, America’s political and business leaders have pursued policies and strategies that exacerbate the problem. Woke policies in academia, government, and big business have created a stultifying environment that is openly hostile to heterodox views. Witness the response to views on COVID that contradicted official opinion. And all this happens against a backdrop of destructive fiscal and monetary policies.

Low growth and low mobility tend to increase political instability when the legitimacy of the political order is predicated upon opportunity and egalitarianism. One source of national unity has been the understanding that every individual has an equal right to pursue happiness, that a dignified life is well within reach of the average person, and that the possibility of rising higher is open to all. When too many people feel they cannot rise, and when even the basics of a middle-class life are difficult to secure, disappointment can breed a sense of injustice that leads to social and political conflict. At first, that conflict acts as a drag on what American society can accomplish. Left unchecked, it will consume energy and resources that could otherwise be put into more productive activities. Thwarted personal aspirations are often channeled into politics and zero-sum factional conflict. The rise of identity politics represents a redirection of the frustrations born of broken dreams. But identity politics further divides us into hostile camps.

We’ve already seen increased social unrest lately, and more is likely to follow. High levels of social and political conflict are dangerous for a country that hopes to maintain a popular form of government. Not so long ago, we could find unity in civic rituals and were encouraged to be proud of our country. Now our history is denigrated in schools and by other sensemaking institutions, leading to cultural dysphoria, social atomization, and alienation. In exchange, you can choose your pronouns, which doesn’t seem like such a great trade. Just as important as regaining broad-based material prosperity and rising standards of living—perhaps more important—is unifying the nation around a common understanding of who we Americans are and why we’re here.

– – –

Chris Buskirk is a contributor to American Greatness.
